Faster Matrix Multiplication Algorithm

More information on the fascinating subject of matrix multiplication algorithms and its history can be found in Pan (1985) and Bürgisser et al. Winograd (1971) improved the leading coefficient of the complexity of Strassen's algorithm from 7 to 6.
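The change in leading coefficient can be checked by solving the operation-count recurrence directly. The sketch below assumes that $n$ is a power of two, that the recursion runs down to $1 \times 1$ blocks with $T(1) = 1$, and that each level performs $c$ additions of $(n/2) \times (n/2)$ blocks, with $c = 18$ for Strassen's original formulation and $c = 15$ for Winograd's variant. The symbols $T$ and $c$ are introduced here for illustration only.

```latex
\begin{align*}
T(n) &= 7\,T(n/2) + c\,(n/2)^2, \qquad T(1) = 1,\\
T(n) &= \left(1 + \tfrac{c}{3}\right) n^{\log_2 7} - \tfrac{c}{3}\, n^2,\\
c = 18 &:\quad T(n) = 7\,n^{\log_2 7} - 6\,n^2,\\
c = 15 &:\quad T(n) = 6\,n^{\log_2 7} - 5\,n^2.
\end{align*}
```

As a sanity check, $n = 2$ gives $T(2) = 7 + 18 = 25$ and $T(2) = 7 + 15 = 22$ respectively, matching both closed forms.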

Breakthrough Faster Matrix Multiply

The techniques can also be simulated within the group-theoretic method of Cohn and Umans [CU03, CKSU05, CU13].

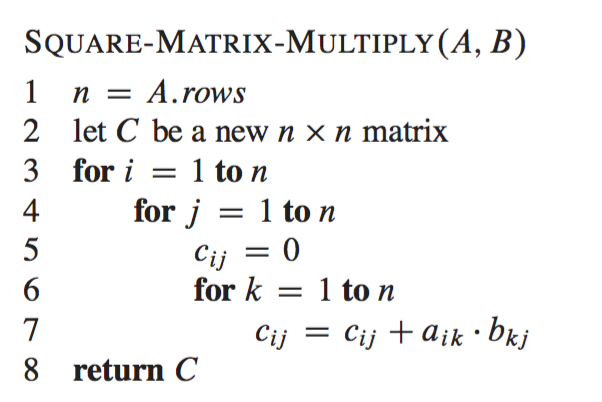

Faster matrix multiplication algorithm. SIAM News, Nov 2005, by Sara Robinson. Fast algorithms for matrix multiplication, i.e., algorithms that perform fewer than $O(N^3)$ operations, are becoming attractive for two simple reasons. Today's software libraries are reaching the core peak performance (i.e., 90% of peak performance) and thus reaching the limits of current systems.

A sequence of several papers [AFLG15, ASU13, BCC17a, BCC17b, AV18a, AV18b, Alm19, CVZ19] has given ... Or, using a fast general-purpose $m \times n$ algorithm may produce much slower results than using an optimised $3 \times 3$ matrix multiply. For two decades, the fastest known matrix multiplication algorithm, with a complexity of $O(n^{2.38})$, was the one obtained by Coppersmith and Winograd (1990).

Many algorithms with low asymptotic cost have large leading coefficients and are thus impractical. Recently I have learned about both the Strassen algorithm and the Coppersmith-Winograd algorithm independently. According to the material I've used, the latter was the asymptotically fastest known matrix multiplication algorithm until 2010. Fast algorithms deploy new algorithmic strategies, new opportunities, thus ...

Fast matrix multiplication algorithms have lower IO-complexity than the classical algorithm. There have been many subsequent asymptotic improvements. The Coppersmith-Winograd algorithm can multiply two $n \times n$ matrices in $O(n^{2.375477})$ time.

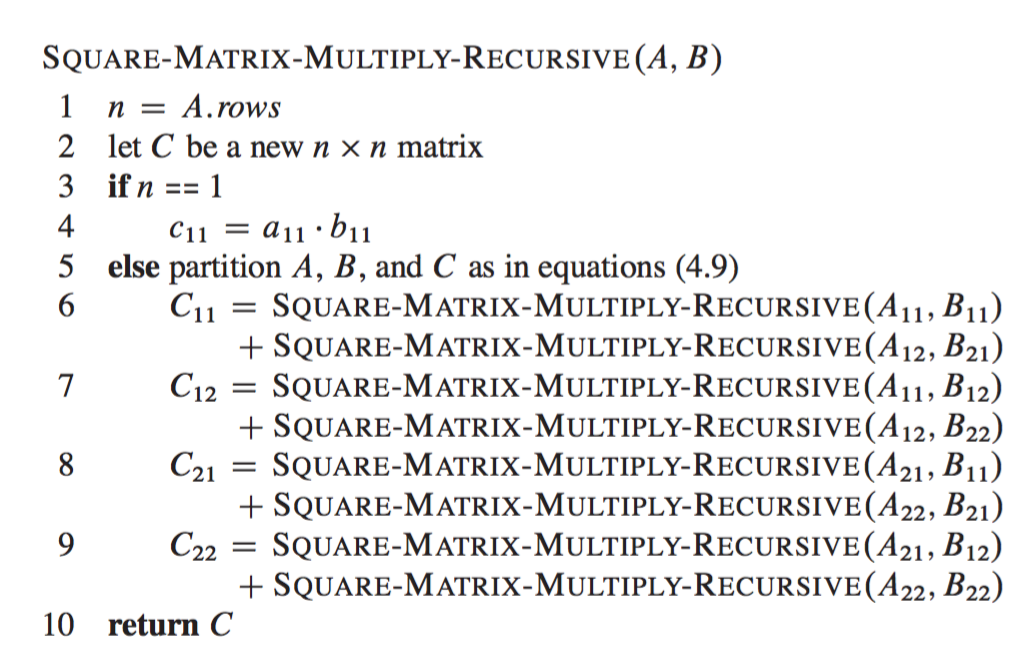

For example, the fastest way on your hardware platform may be to use a slow algorithm but ask your GPU to apply it to 256 matrices in parallel. In this paper we devise two algorithms for matrix multiplication. The idea of fast matrix multiplication algorithms is to perform fewer recursive matrix multiplications at the expense of more matrix additions.

These algorithms make more efficient use of computational resources, such as the computation time, random access memory (RAM), and the number of passes over the data, than do previously known algorithms for these problems. That technique, called matrix multiplication, previously set a hard speed limit on just how quickly linear systems could be solved. Group-theoretic algorithms for matrix multiplication, FOCS Proceedings 2005.

That is, they communicate asymptotically less data within the memory hierarchy and between processors. All fast matrix multiplication algorithms since 1986 use the laser method applied to the Coppersmith-Winograd family of tensors [CW90]. Matrix multiplication still features in the work, but in a complementary role.

Unfortunately, most of these have the disadvantage of very large, often gigantic, hidden constants. Fast and stable matrix multiplication, p1344. Karatsuba's algorithm again trades one multiplication for ...

Found groups with subsets beating the sum of the cubes and satisfying the triple product property. Similarly to the analysis of Strassen's algorithm, one can show that $N(n) = O(n^{\log_2 3})$. Fast matrix multiplication algorithms are of practical use only if the leading coefficient of their arithmetic complexity is sufficiently small.
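The $O(n^{\log_2 3})$ bound is the one usually associated with Karatsuba's integer multiplication, which replaces four half-size products with three. The following is a minimal sketch of that idea for non-negative Python integers. The function name `karatsuba`, the binary splitting, and the base-case threshold of 10 are illustrative choices, not taken from the text.

```python
def karatsuba(x, y):
    """Multiply two non-negative integers using three recursive products."""
    # Base case (assumed threshold): small operands are multiplied directly.
    if x < 10 or y < 10:
        return x * y

    # Split both operands at roughly half the bit length of the larger one.
    half = max(x.bit_length(), y.bit_length()) // 2
    mask = (1 << half) - 1
    x_hi, x_lo = x >> half, x & mask
    y_hi, y_lo = y >> half, y & mask

    # Three recursive products instead of four.
    low = karatsuba(x_lo, y_lo)
    high = karatsuba(x_hi, y_hi)
    # (x_lo + x_hi)(y_lo + y_hi) - low - high = x_lo*y_hi + x_hi*y_lo
    cross = karatsuba(x_lo + x_hi, y_lo + y_hi) - low - high

    # Recombine: x*y = high * 2^(2*half) + cross * 2^half + low
    return (high << (2 * half)) + (cross << half) + low


if __name__ == "__main__":
    a, b = 12345678901234567890, 98765432109876543210
    print(karatsuba(a, b) == a * b)
```

The saved multiplication is paid for with a few extra additions and subtractions per level, which is why the leading coefficient matters in practice.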

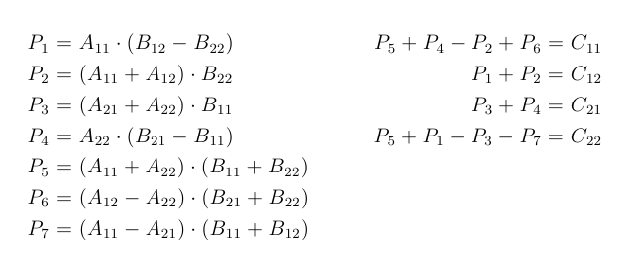

Fast matrix multiplication is still an open problem, but implementation of existing algorithms [5] is a more common area of development than the design of new algorithms [6]. Since matrix multiplication is asymptotically more expensive than matrix addition, this tradeoff results in faster algorithms. The most well-known fast algorithm is due to Strassen and follows the same block structure.
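To make the block structure concrete, here is a minimal pure-Python sketch of Strassen's recursion on square matrices stored as lists of lists. It assumes the dimension is a power of two. The helper names (`add`, `sub`, `split`, `naive_mul`) and the `cutoff` parameter are illustrative, and the code is meant to show the seven-product scheme rather than to be fast.

```python
def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def naive_mul(A, B):
    """Classical triple-loop product, used as the base case."""
    inner, cols = len(B), len(B[0])
    return [[sum(row[k] * B[k][j] for k in range(inner)) for j in range(cols)]
            for row in A]


def split(M):
    """Split a square matrix of even size into four equal blocks."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def strassen(A, B, cutoff=2):
    """Multiply n x n matrices (n a power of two) with 7 recursive products."""
    if len(A) <= cutoff:
        return naive_mul(A, B)

    a11, a12, a21, a22 = split(A)
    b11, b12, b21, b22 = split(B)

    # Seven block products instead of the eight used by block multiplication.
    m1 = strassen(add(a11, a22), add(b11, b22), cutoff)
    m2 = strassen(add(a21, a22), b11, cutoff)
    m3 = strassen(a11, sub(b12, b22), cutoff)
    m4 = strassen(a22, sub(b21, b11), cutoff)
    m5 = strassen(add(a11, a12), b22, cutoff)
    m6 = strassen(sub(a21, a11), add(b11, b12), cutoff)
    m7 = strassen(sub(a12, a22), add(b21, b22), cutoff)

    # Recombine the products into the four blocks of the result.
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)

    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(strassen(A, B, cutoff=1) == naive_mul(A, B))
```

Raising `cutoff` switches to the classical algorithm on small blocks, which is how practical implementations usually keep the extra additions from dominating.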

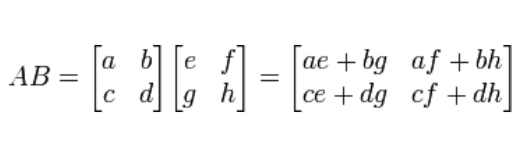

The authors couple it with a new approach that, in essence, is a form of trained divination. Strassen's algorithm is an improvement over the naive algorithm in the case of multiplying two $2 \times 2$ matrices because it uses seven multiplications instead of eight. The algorithm above gives the following recursive equation: $N(n) = 3N(n/2) + O(n)$ and $N(2) = 7$.
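The effect of the branching factor in such a recurrence can be seen by counting base-case products. The sketch below assumes the problem size is a power of two and that the recursion stops at size 1 with a single product. The function name `recursive_products` is hypothetical. It ignores the additive term of the recurrence and counts only multiplications.

```python
import math


def recursive_products(n, branches):
    """Count base-case products when a size-n problem splits into
    `branches` subproblems of size n/2 (n assumed to be a power of two)."""
    if n == 1:
        return 1
    return branches * recursive_products(n // 2, branches)


if __name__ == "__main__":
    for k in range(1, 6):
        n = 2 ** k
        # 3 branches: Karatsuba-style recursion, n^(log2 3) products
        # 7 branches: Strassen-style recursion, n^(log2 7) products
        # 8 branches: plain block matrix multiplication, n^3 products
        print(n,
              recursive_products(n, 3),
              recursive_products(n, 7),
              recursive_products(n, 8),
              round(n ** math.log2(7)))
```

With `branches` subproblems per level and $\log_2 n$ levels, the count is $\text{branches}^{\log_2 n} = n^{\log_2 \text{branches}}$, which is where the exponents $\log_2 3$ and $\log_2 7$ come from.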

"You can guess your way to solutions," said Peng. The IO-complexity is measured as a function of the number of processors $P$, the local memory size $M$, and the matrix dimension $n$. Strassen's algorithm (1969) was the first sub-cubic matrix multiplication algorithm.

Communication Costs Of Strassen's Matrix Multiplication, February 2014, Communications Of The ACM

2.9 Strassen's Matrix Multiplication, YouTube

Toward An Optimal Matrix Multiplication Algorithm Kilichbek Haydarov

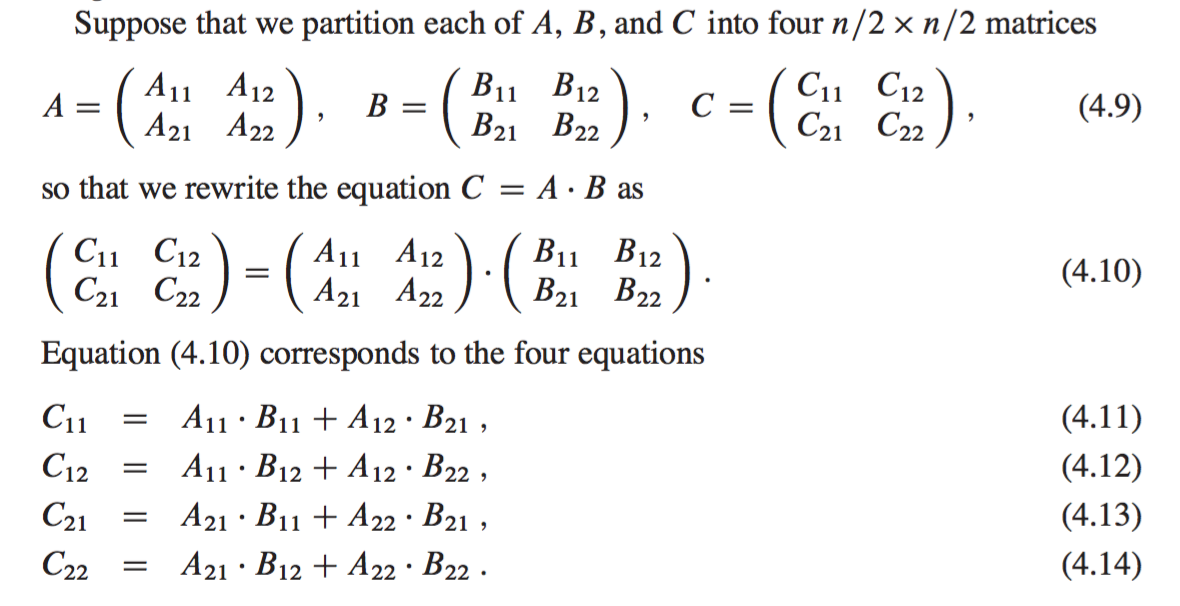

Matrix Multiplication Using The Divide And Conquer Paradigm

Fast Context Free Grammar Parsing Requires Fast Boolean Matrix Multiplication

Strassen Matrix Multiplication C The Startup

Strassen's Matrix Multiplication, Divide And Conquer, GeeksforGeeks, YouTube

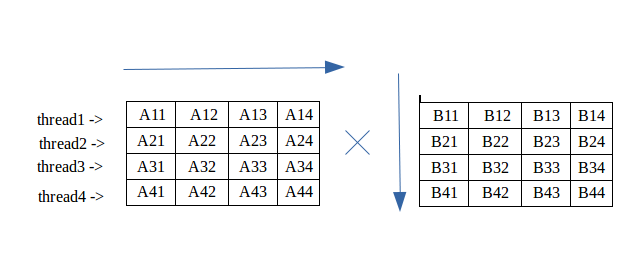

Matrix Multiplication With A Hypercube Algorithm On Multi Core Processor Cluster

Multiplication Of Matrix Using Threads Geeksforgeeks

What Is The Best Matrix Multiplication Algorithm Stack Overflow

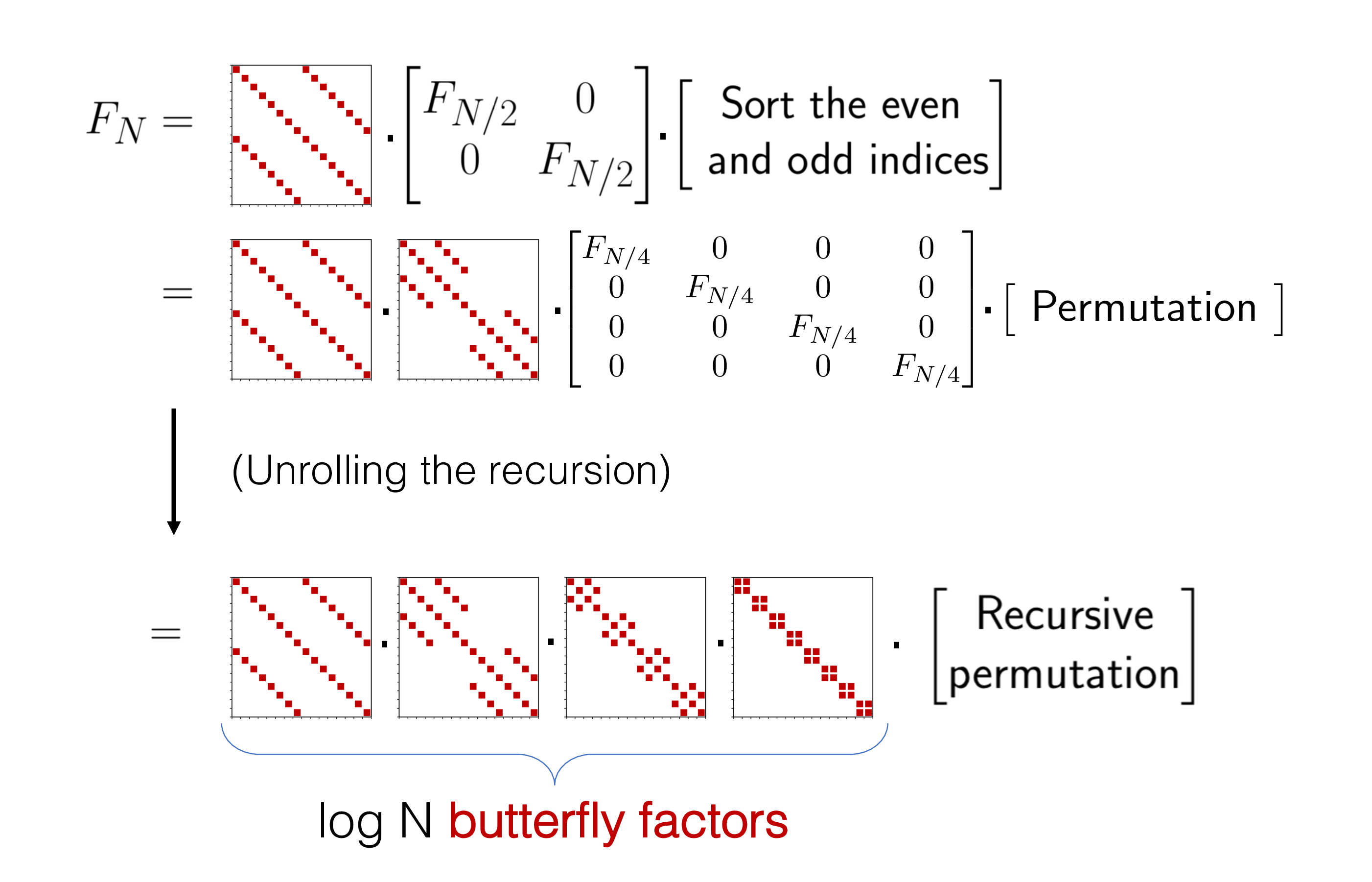

Butterflies Are All You Need A Universal Building Block For Structured Linear Maps Stanford Dawn

Which Algorithm Is Performant For Matrix Multiplication Of 4x4 Matrices Of Affine Transformations Software Engineering Stack Exchange

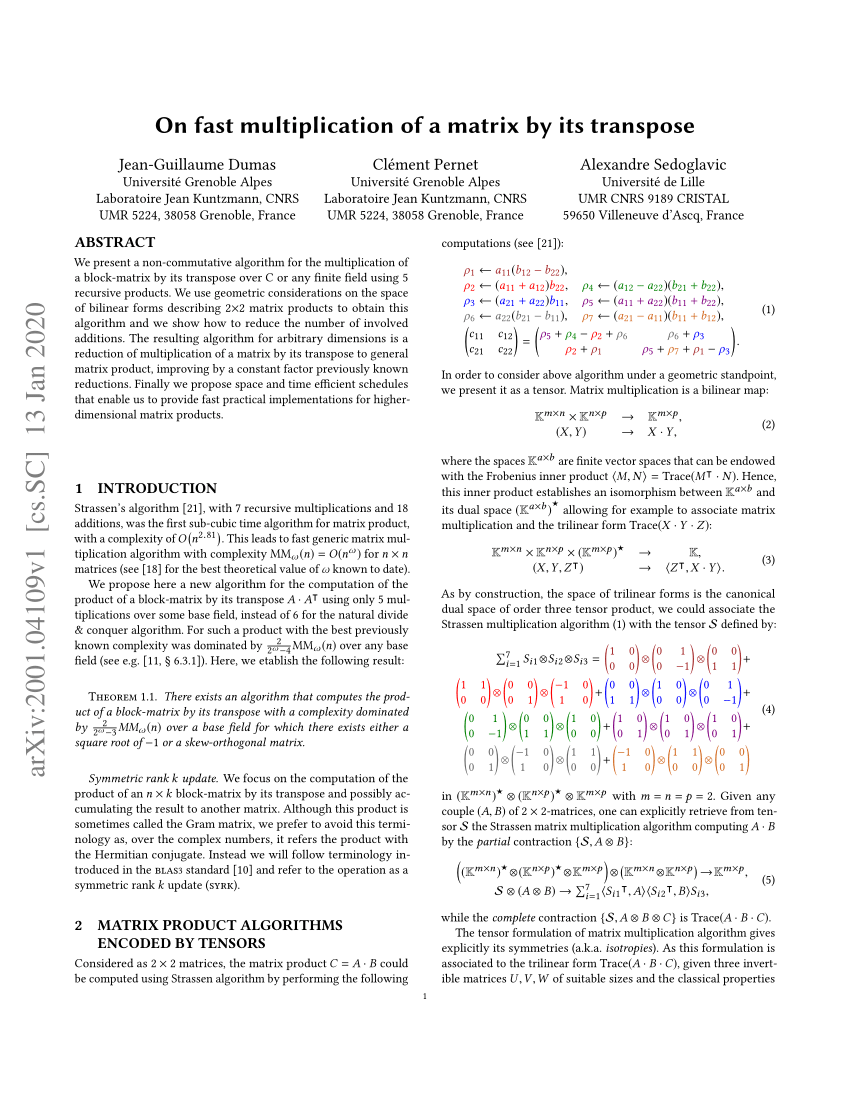

Pdf On Fast Multiplication Of A Matrix By Its Transpose

Karatsuba Algorithm For Fast Multiplication Using Divide And Conquer Algorithm Geeksforgeeks