Matrix Dot Product in PyTorch

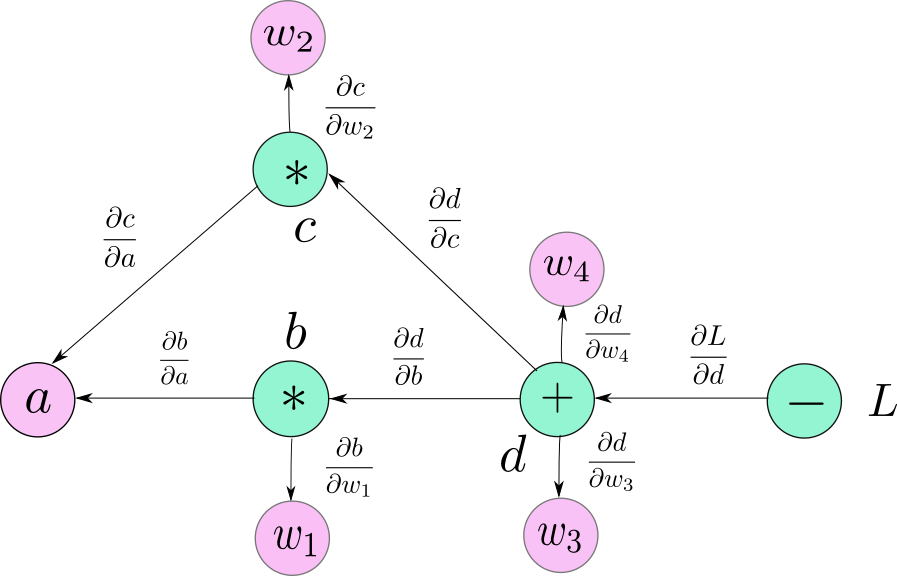

These algorithms are already implemented in PyTorch itself and in other libraries such as. Inspired by Matt Mazur, we'll work through every calculation step for a super-small neural network with 2 inputs, 2 hidden units, and 2 outputs.
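
The step-by-step walkthrough described above can be sketched in PyTorch as follows. This is a minimal illustration, not Matt Mazur's exact example: the random weights, the sigmoid activations, and the squared-error loss are our assumptions. It computes every gradient by hand with the chain rule and then checks the results against autograd:

```python
import torch

torch.manual_seed(0)

# Illustrative inputs and targets for a 2-2-2 network.
x = torch.tensor([0.05, 0.10])
target = torch.tensor([0.01, 0.99])

# Random parameters (an assumption; not the values from the original walkthrough).
W1 = torch.randn(2, 2, requires_grad=True)   # input -> hidden
b1 = torch.randn(2, requires_grad=True)
W2 = torch.randn(2, 2, requires_grad=True)   # hidden -> output
b2 = torch.randn(2, requires_grad=True)

# Forward pass: sigmoid hidden layer, sigmoid output, squared-error loss.
h = torch.sigmoid(W1 @ x + b1)
y = torch.sigmoid(W2 @ h + b2)
loss = 0.5 * ((target - y) ** 2).sum()

# Backward pass with autograd fills W1.grad, b1.grad, W2.grad, b2.grad.
loss.backward()

# The same gradients by hand, one chain-rule step at a time.
with torch.no_grad():
    delta2 = (y - target) * y * (1 - y)        # dL/dz2: loss derivative times sigmoid derivative
    grad_W2 = torch.outer(delta2, h)           # dL/dW2[i, j] = delta2[i] * h[j]
    grad_b2 = delta2
    delta1 = (W2.T @ delta2) * h * (1 - h)     # push the error back through W2 and the hidden sigmoid
    grad_W1 = torch.outer(delta1, x)           # dL/dW1[i, j] = delta1[i] * x[j]
    grad_b1 = delta1

print(torch.allclose(grad_W1, W1.grad, atol=1e-6), torch.allclose(grad_W2, W2.grad, atol=1e-6))
```

Writing the manual pass next to `loss.backward()` makes it easy to see that autograd is applying exactly these chain-rule products.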

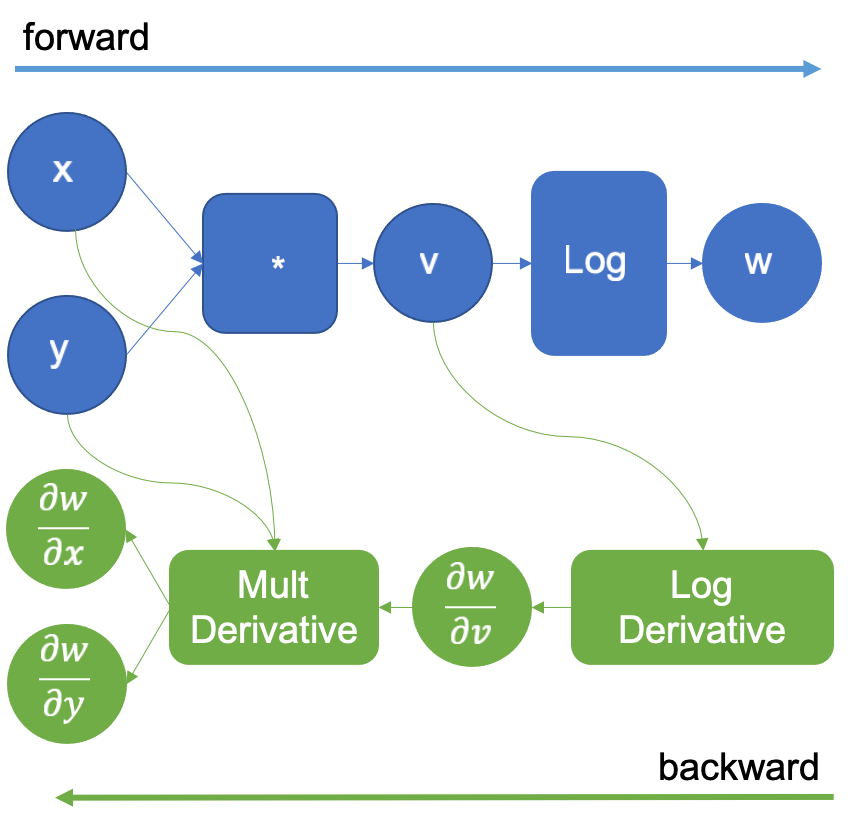

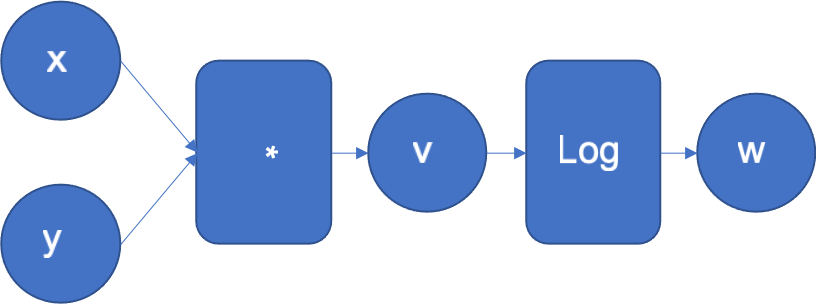

PyTorch Basics: Understanding Autograd and Computation Graphs

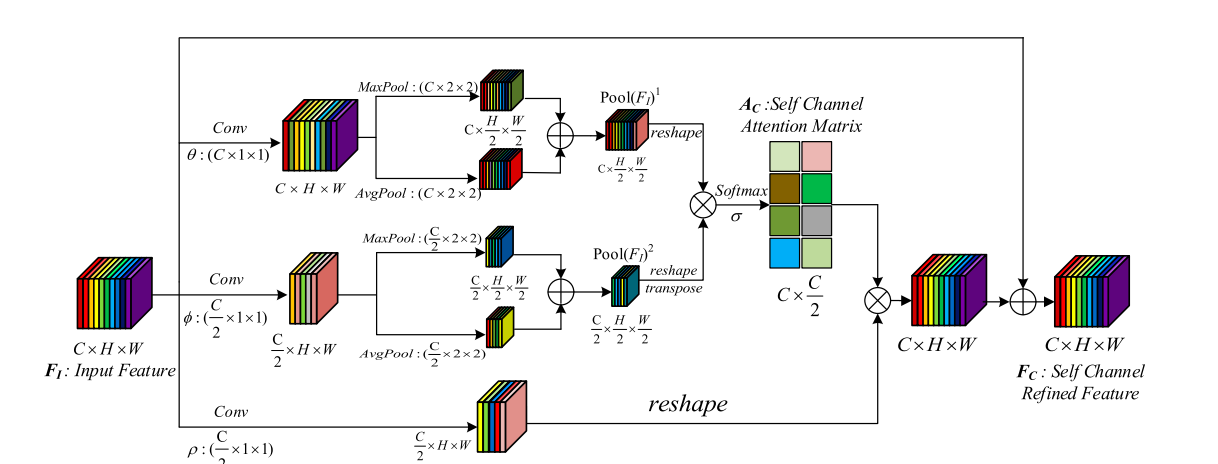

Create linear projections given an input $\mathbf{X} \in \mathbb{R}^{batch \times tokens \times dim}$.
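
A minimal sketch of such projections, assuming small illustrative sizes and three bias-free `nn.Linear` layers (the names `W_q`, `W_k`, `W_v` are our own, hypothetical choice). `nn.Linear` acts on the last dimension, so the batch and token axes pass through untouched:

```python
import torch
import torch.nn as nn

batch, tokens, dim = 2, 5, 8          # illustrative sizes
X = torch.randn(batch, tokens, dim)

# Hypothetical projection layers for queries, keys, and values.
W_q = nn.Linear(dim, dim, bias=False)
W_k = nn.Linear(dim, dim, bias=False)
W_v = nn.Linear(dim, dim, bias=False)

# Each projection maps (batch, tokens, dim) -> (batch, tokens, dim).
Q, K, V = W_q(X), W_k(X), W_v(X)
print(Q.shape)  # torch.Size([2, 5, 8])

# The same projection written as an explicit matrix product, X @ W^T.
Q_manual = X @ W_q.weight.T
print(torch.allclose(Q, Q_manual, atol=1e-6))
```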

For example, a matrix product is valid if the first matrix has shape 3×2 and the second has shape 2×2, because the inner dimensions (2 and 2) match. PyTorch Geometric's `degree` utility, by contrast, computes the unweighted degree of a given one-dimensional index tensor. NumPy's `np.dot` computes the inner product for 1D arrays and performs matrix multiplication for 2D arrays.
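
A quick way to check the shape rule with `torch.mm`; the random values are placeholders and only the shapes matter:

```python
import torch

A = torch.rand(3, 2)
B = torch.rand(2, 2)

# (3, 2) @ (2, 2): inner dimensions are both 2, so the product exists and is (3, 2).
C = torch.mm(A, B)
print(C.shape)  # torch.Size([3, 2])

# (2, 2) @ (3, 2): inner dimensions 2 and 3 differ, so PyTorch raises an error.
mismatch_raised = False
try:
    torch.mm(B, A)
except RuntimeError as err:
    mismatch_raised = True
    print("shape mismatch:", err)
```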

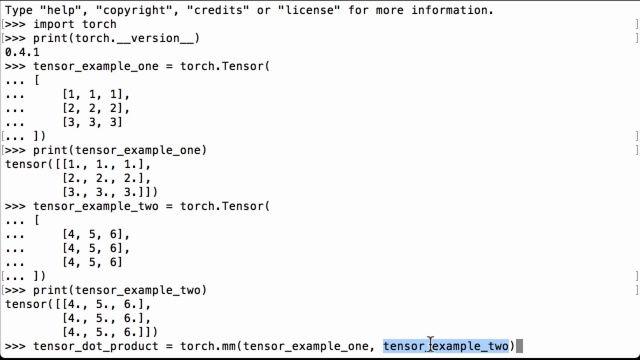

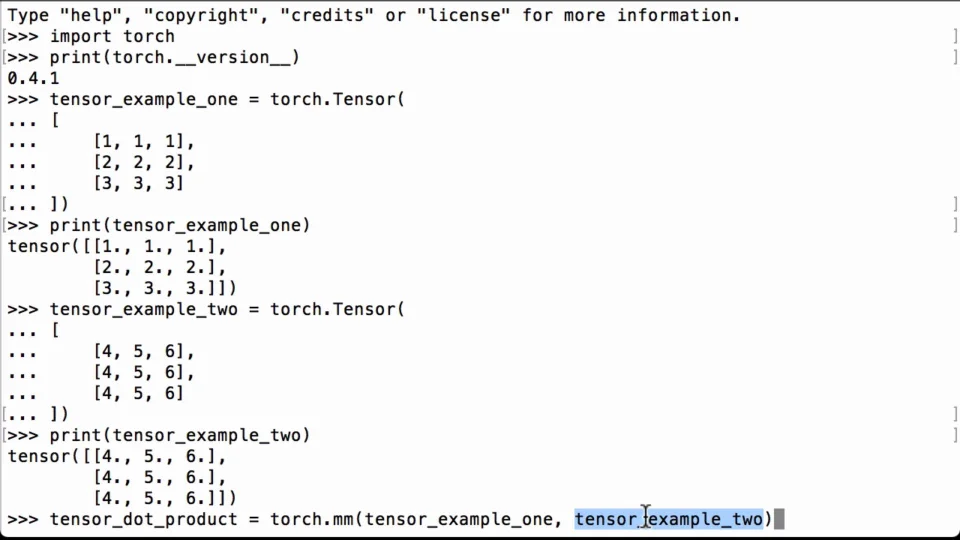

We are using PyTorch 0.2.0_4. The `1` tells PyTorch that our embeddings matrix is laid out as (num_embeddings, vector_dimension) and not (vector_dimension, num_embeddings). The matrix multiplication happens in the $d$ dimension.

Unlike NumPy's `dot`, `torch.dot` intentionally only supports computing the dot product of two 1D tensors with the same number of elements. By popular demand, the function `torch.matmul` performs matrix multiplication if both arguments are 2D and computes their dot product if both arguments are 1D. Please consider adding a floating-point operations calculator for computational graph operations.
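
The difference between `torch.dot` and `torch.matmul` can be seen in a few lines; the tensors here are arbitrary examples:

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])
d = torch.dot(a, b)            # 1*4 + 2*5 + 3*6
print(d)                       # tensor(32.)

M = torch.ones(2, 3)
N = torch.ones(3, 2)

# torch.dot only accepts 1D tensors, so 2D inputs raise a RuntimeError.
dot_rejected_2d = False
try:
    torch.dot(M, N)
except RuntimeError:
    dot_rejected_2d = True

# torch.matmul dispatches on dimensionality.
print(torch.matmul(a, b))      # 1D and 1D: dot product, tensor(32.)
P = torch.matmul(M, N)         # 2D and 2D: matrix product, a (2, 2) tensor of 3s
print(P)
```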

NumPy's `np.dot`, in contrast, is more flexible. If both tensors are 1-dimensional, the dot product (a scalar) is returned. Bug: if you compute the dot product of a column sliced from a larger CUDA matrix with itself using `th.mm`, it will produce an illegal memory access.
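
For comparison, a small sketch of how `np.dot` behaves for different input dimensionalities (example arrays chosen so the results are easy to check by hand):

```python
import numpy as np

a = np.array([1.0, 2.0, 3.0])
b = np.array([4.0, 5.0, 6.0])
M = np.arange(6.0).reshape(2, 3)   # [[0, 1, 2], [3, 4, 5]]
N = np.arange(6.0).reshape(3, 2)   # [[0, 1], [2, 3], [4, 5]]

print(np.dot(a, b))   # 1D with 1D: inner product, 32.0
print(np.dot(M, N))   # 2D with 2D: matrix product [[10, 13], [28, 40]], same as M @ N
print(np.dot(M, a))   # 2D with 1D: sums over the last axis of M and a, giving [8, 26]
```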

This works because we're multiplying a 3×3 matrix by a 3×3 matrix. The point of the entire miniseries is to reproduce matrix operations such as matrix inverse and SVD using PyTorch's automatic differentiation capability. `torch.dot` computes the dot product of two 1D tensors.
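
As a hedged illustration of the miniseries idea, here is one way to recover a matrix inverse with autograd: treat $X$ as a parameter and minimise $\lVert AX - I \rVert_F^2$ with plain gradient descent (`torch.optim.SGD`). The matrix $A$, the learning rate, and the step count are our assumptions, picked so that the iteration converges; this is not necessarily the approach the miniseries itself uses:

```python
import torch

# A small symmetric positive-definite matrix chosen for illustration.
A = torch.tensor([[4.0, 1.0, 0.0],
                  [1.0, 3.0, 1.0],
                  [0.0, 1.0, 2.0]])
I = torch.eye(3)

# Unknown X, initialised to zeros; we want A @ X to equal I.
X = torch.zeros(3, 3, requires_grad=True)
optimizer = torch.optim.SGD([X], lr=0.02)

for step in range(2000):
    optimizer.zero_grad()
    loss = ((A @ X - I) ** 2).sum()   # squared Frobenius norm of the residual
    loss.backward()                   # autograd supplies dloss/dX = 2 A^T (A X - I)
    optimizer.step()

# Compare against the closed-form inverse.
print(torch.allclose(X.detach(), torch.linalg.inv(A), atol=1e-4))
```

The learning rate matters: gradient descent on this loss only converges when it is small relative to the largest eigenvalue of $A^\top A$.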

In this video, we will do element-wise multiplication of matrices in PyTorch to get the Hadamard product. We'd like to use it for deep learning models.
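
A minimal sketch contrasting the Hadamard (element-wise) product with the true matrix product, using two hand-picked 2×2 tensors:

```python
import torch

x = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
y = torch.tensor([[5.0, 6.0], [7.0, 8.0]])

hadamard = x * y               # element-wise: [[5, 12], [21, 32]]
same = torch.mul(x, y)         # functional form of the same operation
matrix_product = x @ y         # true matrix product: [[19, 22], [43, 50]]
print(hadamard, matrix_product, sep="\n")
```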

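`tensor_dot_product = torch.mm(tensor_example_one, tensor_example_two)`. Remember that matrix multiplication requires the number of columns of the first matrix to equal the number of rows of the second; only element-wise multiplication requires the matrices to have the same size and shape. `norm` is now a row vector where $\text{norm}_i = \lVert E_i \rVert$. And technically is the thing we'll be trying to improve.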

We divide each $E_i \cdot E_j$ by $\lVert E_j \rVert$. Matrix operations with PyTorch optimizer, part 1. We will create two PyTorch tensors and then show how to do the element-wise multiplication of the two of them.
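
The normalisation steps above can be sketched as a pairwise cosine-similarity computation. This assumes a small random embeddings matrix `E` laid out as (num_embeddings, vector_dimension); the variable names are illustrative:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
num_embeddings, vector_dimension = 4, 3
E = torch.rand(num_embeddings, vector_dimension)   # row i is embedding E_i

# dim=1 reduces across vector_dimension, so norm[i] = ||E_i||; shape (num_embeddings,).
norm = E.norm(dim=1)

# Every pairwise dot product E_i . E_j at once: shape (num_embeddings, num_embeddings).
dots = torch.mm(E, E.t())

# Broadcasting: dividing by `norm` (treated as a row) scales column j by 1 / ||E_j||,
# then dividing by norm[:, None] (a column) scales row i by 1 / ||E_i||.
cosine = dots / norm / norm[:, None]

# Cross-check: normalise the rows first, then take dot products.
E_unit = F.normalize(E, dim=1)
reference = E_unit @ E_unit.t()
print(torch.allclose(cosine, reference, atol=1e-6))
```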

`input` (Tensor): first tensor in the dot product. So the value function $V(S)$ is a 40×40 array and the state-action value function $Q(S, A)$ is a 40×40×2 array, because there are only 2 actions to take. PyTorch Geometric's `softmax` utility computes a sparsely evaluated softmax.

But not the other way around. Dot-product attention: extending our previous def. Inputs. This blog post is part of a 3-post miniseries.

The dot product is over an input whose dimension is 1, so it involves only a multiplication, not a sum. Based on PyTorch's official. Note that the `*` operator will not perform matrix multiplication; rather, it performs element-wise multiplication, as in NumPy.

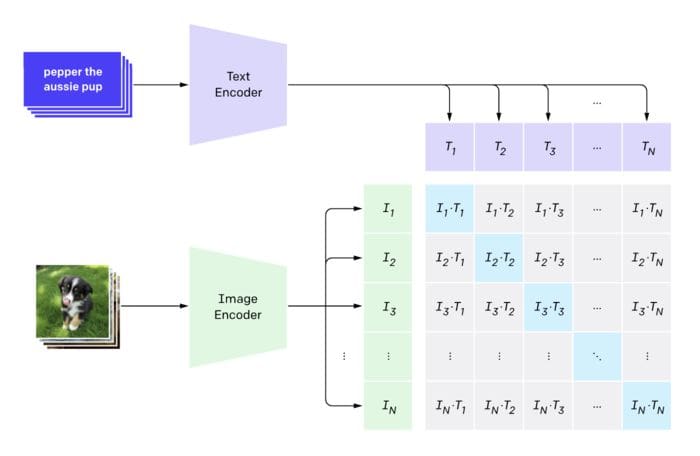

`torch.matmul` computes the matrix product of two tensors. Scaled dot-product self-attention, the math in steps: a query $q$ and a set of key-value ($k$-$v$) pairs are mapped to an output. The query, keys, values, and output are all vectors. The output is a weighted sum of the values, where the weight of each value is computed by an inner product of the query and the corresponding key. Queries and keys have the same dimensionality.
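
The steps above can be sketched as a short function. The name `scaled_dot_product_attention` here is our own helper (PyTorch 2.x also ships a built-in `torch.nn.functional.scaled_dot_product_attention`), and the sizes are illustrative:

```python
import math
import torch

def scaled_dot_product_attention(Q, K, V):
    """Q: (..., n_q, d_k), K: (..., n_k, d_k), V: (..., n_k, d_v)."""
    d_k = Q.size(-1)
    # Inner product of every query with every key, scaled by sqrt(d_k).
    scores = Q @ K.transpose(-2, -1) / math.sqrt(d_k)   # (..., n_q, n_k)
    weights = torch.softmax(scores, dim=-1)             # each row sums to 1
    return weights @ V, weights                         # weighted sum of the values

batch, tokens, d_k, d_v = 2, 5, 8, 4
Q = torch.randn(batch, tokens, d_k)
K = torch.randn(batch, tokens, d_k)   # keys share d_k with the queries
V = torch.randn(batch, tokens, d_v)
out, weights = scaled_dot_product_attention(Q, K, V)
print(out.shape)  # torch.Size([2, 5, 4])
```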

We can now do the PyTorch matrix multiplication using PyTorch's `torch.mm` operation to do a dot product between our first matrix and our second matrix. Notice that we're dividing a matrix of shape (num_embeddings, num_embeddings) by a row.

In reality, I'm actually implementing it so that $V$ and $Q$ are each the matrix dot product of a feature vector $X(S, A)$ and a weights vector. CS231n and 3Blue1Brown do a really fine job explaining the basics, but maybe you still feel a bit shaky when it comes to implementing backprop. If the first argument is 1-dimensional and the second argument is 2-dimensional, a 1 is prepended to its dimension for the purpose of the matrix multiply and removed afterwards.
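
A hedged sketch of the linear function approximation described above, on the 40×40 grid with 2 actions. The feature tensors and the number of features (`n_features`) are hypothetical; only the shapes follow the text:

```python
import torch

n_rows, n_cols, n_actions = 40, 40, 2
n_features = 6   # hypothetical feature count

# Hypothetical features: one vector per state for V, one per state-action pair for Q.
X_s = torch.rand(n_rows, n_cols, n_features)
X_sa = torch.rand(n_rows, n_cols, n_actions, n_features)

# Separate learned weight vectors for V and Q.
w_v = torch.zeros(n_features, requires_grad=True)
w_q = torch.zeros(n_features, requires_grad=True)

# With a 1D right-hand operand, matmul contracts the last axis of the features.
V = X_s @ w_v     # V(S)    -> torch.Size([40, 40])
Q = X_sa @ w_q    # Q(S, A) -> torch.Size([40, 40, 2])
print(V.shape, Q.shape)
```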

For matrix multiplication in PyTorch, use `torch.mm`. If you instead compute `(v**2).sum()` (which doesn't use cuBLAS), it will not produce the illegal memory access. `torch.dot(input, other, out=None) → Tensor`.
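
The workaround can be illustrated on the CPU, where both routes should agree. This sketch only shows that the two computations are equivalent for a non-contiguous column slice; it does not reproduce the CUDA bug, and we don't know which PyTorch versions are affected:

```python
import torch

M = torch.rand(5, 4)
col = M[:, 1]                      # a column slice: a strided, non-contiguous view
print(col.is_contiguous())         # False

# Dot product of the column with itself as a (1, 5) @ (5, 1) matrix product.
via_mm = torch.mm(col.unsqueeze(0), col.unsqueeze(1)).squeeze()

# Element-wise square and reduction: no matrix-multiply kernel involved.
via_sum = (col ** 2).sum()
print(torch.allclose(via_mm, via_sum))
```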

Here we're exploiting something called broadcasting.

PyTorch Geometric's `dropout_adj` randomly drops edges from the adjacency matrix (`edge_index`, `edge_attr`) with probability `p` using samples from a Bernoulli distribution. The behavior of `torch.matmul` depends on the dimensionality of the tensors as follows.

TL;DR: Backpropagation is at the core of every deep learning system. If both arguments are 2-dimensional, the matrix-matrix product is returned. First, we create our first PyTorch tensor using PyTorch's `torch.rand` functionality.
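
The dimensionality rules of `torch.matmul` can be checked directly by printing result shapes; the inputs below are random tensors created with `torch.rand`:

```python
import torch

v = torch.rand(3)
M = torch.rand(3, 4)
batch = torch.rand(10, 2, 3)

print(torch.matmul(v, v).shape)      # 1D, 1D: dot product -> torch.Size([])
print(torch.matmul(M.T, M).shape)    # 2D, 2D: matrix-matrix product -> torch.Size([4, 4])
print(torch.matmul(M.T, v).shape)    # 2D, 1D: matrix-vector product -> torch.Size([4])
print(torch.matmul(v, M).shape)      # 1D, 2D: 1 prepended, then removed -> torch.Size([4])
print(torch.matmul(batch, M).shape)  # batched (10, 2, 3) and (3, 4) -> torch.Size([10, 2, 4])
```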

For NLP, that would be the dimensionality of word embeddings.

Pytorch Matrix Multiplication How To Do A Pytorch Dot Product Pytorch Tutorial

Overview Of Pytorch Autograd Engine Pytorch

Is There An Function In Pytorch For Converting Convolutions To Fully Connected Networks Form Stack Overflow

Linear Algebra Operations With Pytorch Dev Community

Pytorch Matrix Multiplication Matmul Mm Programmer Sought

Multilingual Clip With Huggingface Pytorch Lightning Kdnuggets

007 Pytorch Linear Classifiers In Pytorch Experiments And Intuition

Vector Jacobian Product Calculation Autograd Pytorch Forums

Pytorch Getting Started With Pytorch

torch.dot Function Consistent With NumPy · Issue #138 · pytorch/pytorch · GitHub

Dot Product Of Two 4d Tensors Vision Pytorch Forums

PyTorch C++ Frontend Part II: Inputs, Weights and Bias | LearnOpenCV